What is Terraform?

Brett Weir, May 15, 2023

HashiCorp's Terraform is a powerful tool that enables higher-order infrastructure management across tools and providers. It does this by:

- Providing a standardized, declarative front end for remote APIs
- Supporting gradual change, rollback, and disaster recovery
- Making your infrastructure self-documenting
- Supporting immutable infrastructure deployment patterns

That all sounds great, but what does any of that mean? To understand Terraform, I find it best to understand what it replaces.

What about ...

Let's say you have a server somewhere. You need to put stuff on it. How should you approach it?

... hand edits?

The easiest possible solution is to hack away. They have a UI for a reason, right? Log right in, tweak the config, install some things, add your SSH keys, and away you go. This will work famously, and potentially for a very long time. That is, of course, until it doesn't.

Cracks will start to show sooner or later. Someone will ask you to change something. Your app will get more users. You'll need to migrate something to somewhere. You'll hand off some work to the new team member. The server's OS reaches end-of-life. Over time, these miscellaneous changes accumulate and are then forgotten as the team moves on to new work. This is called configuration drift, and it is ruinous because it is both catastrophic and inevitable. Any server that sits there long enough will eventually succumb to this fate.

... change orders?

One solution is to neurotically control and gate all changes to systems, requiring operators to fill out paperwork and get changes approved and signed off by multiple people.

This "helps" to prevent changes by demoralizing the team and making them resistant to working on or improving the system at all. If, despite that, someone does eventually try to make a change, then it does little to prevent erroneous or incidental changes.

... scripts?

Another solution is writing a script to do the deployment. This is a stunning advancement in practice, as it acknowledges that making changes yourself is probably a bad idea. It also helps you remember how you did something, why, and lets you do it again if needed.

A problem you quickly discover with this approach is the pleasure of coding for all possible circumstances. Writing a script to install a package? Okay, great. What should I do if the package is already installed? Should I install the newer version? Remove and reinstall? Purge existing configuration associated with the package? What if there's a conflicting package?

Coding for every eventuality on a long-lived system is nearly impossible.

Another problem is that you're still running your script on a live, existing server, which means your install script isn't the only thing potentially making changes. Your system auto updater is also making changes, and so are the support techies who are panic fixing the bug that got introduced. By extension, there's no great way to roll back your changes, because there is no well-defined way to know what changes have actually been made. Sure, you can restore the image from a backup, but what exactly does that do (or undo)?

... configuration management?

Configuration management (CM) tools like Chef, Puppet, and Ansible have their uses. In many ways, the benefits and drawbacks of CM tools are the same as for writing scripts.

They bring extra features to the table to support managing multiple machines, and come equipped with packages and modules to make coding idempotent changes easier. They still suffer from incomplete knowledge of system state, and you still gotta code for the great many situations that you may run into while provisioning.

So how do you manage a server over its lifetime?

It's a trick question: you don't.

Enter Terraform

Terraform is a different kind of tool entirely. It's a configuration management tool, but it approaches things so differently that it's really in a class of its own.

Terraform abandons the idea that things should be long-lived at all. It treats the things it manages as immutable black boxes. You might be able to change a label or a tag, but for any change that would alter the nature of a resource, like installing a package or changing certain parameters, Terraform prefers to just delete it and start over.

What?!

This is a big departure from endlessly tweaking systems forever. Now, instead of operators getting mired in a sea of patches and updates and configs, resources become atomic building blocks that can be assembled and reassembled as needed.

To demonstrate how Terraform works, we'll deploy a DigitalOcean droplet.

Project setup

Terraform projects are written in their own configuration language, and Terraform files end in .tf. Terraform syntax is declarative, so ordering doesn't matter, and resources are defined at a directory, or "module", level, so you can spread your resources out across multiple .tf files in a directory, and name them whatever you like.

Create a directory called whatever you like, and add main.tf and terraform.tf files:

    mkdir droplet-deployment/
    cd droplet-deployment/
    touch main.tf terraform.tf

Finding providers

The next thing to do is find a provider.

A provider is a plugin that wraps an API so that it can be modeled declaratively using HCL code. Companies, organizations, and individuals maintain Terraform providers because they understand the value that Terraform provides, and know that many developers will refuse to integrate services that don't offer Terraform providers. :smile:

We can search the Terraform Registry, where we find the digitalocean/digitalocean provider, maintained by none other than DigitalOcean themselves. Let's add it to the terraform.tf file:

    # terraform.tf
    terraform {
      required_providers {
        digitalocean = {
          source  = "digitalocean/digitalocean"
          version = "~> 2.28"
        }
      }
    }

We can add a credential in whatever way desired to connect to our DigitalOcean account. I prefer environment variables:

    export DIGITALOCEAN_TOKEN="dop_v1_XXXXXXXXXXXXXXXX"

Run terraform init to download the providers and configure your state:

    terraform init

    $ terraform init
    Initializing the backend...

    Initializing provider plugins...
    - Finding digitalocean/digitalocean versions matching "~> 2.28"...
    - Installing digitalocean/digitalocean v2.28.1...
    - Installed digitalocean/digitalocean v2.28.1 (signed by a HashiCorp partner, key ID F82037E524B9C0E8)
    ...
    Terraform has been successfully initialized!
    ...

Then we can declare our droplet resource according to the example:

    # main.tf
    resource "digitalocean_droplet" "app" {
      image  = "ubuntu-22-04-x64"
      name   = "app"
      region = "sfo3"
      size   = "s-1vcpu-1gb"
    }

We haven't created anything yet, though. That comes next.

Making plans

Terraform has an excellent view of the systems that it manages. It knows:

- The actual state of the system, by querying the target API,
- The expected state of the system, by reading the state file, and
- The desired state of the system, by reading the HCL files in your repository.

Through all these views of the system, Terraform is able to build a directed graph of changes required to achieve the desired state. This is known, in Terraform jargon, as a "plan".
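To make the graph idea a little more concrete, here is a minimal sketch of how references between resources become edges in that graph. It is a side illustration rather than part of the walkthrough: the volume and attachment resources, along with their names and size, are assumptions made up for this example, and the fragment assumes the droplet from main.tf and the required_providers block from terraform.tf are already in place.

```hcl
# Hypothetical additions for illustration only; not part of the walkthrough.

# No references to other resources, so Terraform is free to create this
# volume in parallel with the droplet.
resource "digitalocean_volume" "data" {
  name   = "app-data"
  region = "sfo3"
  size   = 10 # size in GiB
}

# References both the droplet and the volume, so it depends on both and
# can only be created after they exist (and must be destroyed before them).
resource "digitalocean_volume_attachment" "data" {
  droplet_id = digitalocean_droplet.app.id
  volume_id  = digitalocean_volume.data.id
}
```

Nothing here states an order explicitly. The ordering falls out of the references, which is why the order of blocks within your .tf files doesn't matter.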

After writing out your HCL code, you can create a plan using the terraform plan command:

    terraform plan

On running the plan, Terraform will tell you exactly what changes it intends to make, so you can review them before Terraform does anything:

    $ terraform plan

    Terraform used the selected providers to generate the following execution plan. Resource actions are indicated with the
    following symbols:
      + create

    Terraform will perform the following actions:

      # digitalocean_droplet.app will be created
      + resource "digitalocean_droplet" "app" {
          + backups              = false
          + created_at           = (known after apply)
          + disk                 = (known after apply)
          + graceful_shutdown    = false
          + id                   = (known after apply)
          + image                = "ubuntu-22-04-x64"
          + ipv4_address         = (known after apply)
          + ipv4_address_private = (known after apply)
          + ipv6                 = false
          + ipv6_address         = (known after apply)
          + locked               = (known after apply)
          + memory               = (known after apply)
          + monitoring           = false
          + name                 = "app"
          + price_hourly         = (known after apply)
          + price_monthly        = (known after apply)
          + private_networking   = (known after apply)
          + region               = "sfo3"
          + resize_disk          = true
          + size                 = "s-1vcpu-1gb"
          + status               = (known after apply)
          + urn                  = (known after apply)
          + vcpus                = (known after apply)
          + volume_ids           = (known after apply)
          + vpc_uuid             = (known after apply)
        }

    Plan: 1 to add, 0 to change, 0 to destroy.

    ────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────

    Note: You didn't use the -out option to save this plan, so Terraform can't guarantee to take exactly these actions if you run
    "terraform apply" now.

This is huge. Terraform allows you to see and understand the changes you're about to make before you make them. You know, so you don't do fun things like clobber the database or delete the firewall.

You can visualize your plan with the graph subcommand, which emits Graphviz code that you can render into an image:

    terraform graph | dot -Tsvg > plan.svg

Here, we see that Terraform will instantiate the DigitalOcean provider and use that to create our droplet.

Applying plans

When you are feeling confident in the changes you're about to make, you can "apply" them, which executes your plan's proposed changes to arrive at the new state.

$ terraform apply

...

...

...

Plan: 1 to add, 0 to change, 0 to destroy.

Do you want to perform these actions?

Terraform will perform the actions described above.

Only 'yes' will be accepted to approve.

Enter a value: yes

digitalocean_droplet.app: Creating...

digitalocean_droplet.app: Still creating... [10s elapsed]

digitalocean_droplet.app: Still creating... [20s elapsed]

digitalocean_droplet.app: Still creating... [30s elapsed]

digitalocean_droplet.app: Still creating... [40s elapsed]

digitalocean_droplet.app: Creation complete after 42s [id=355390759]

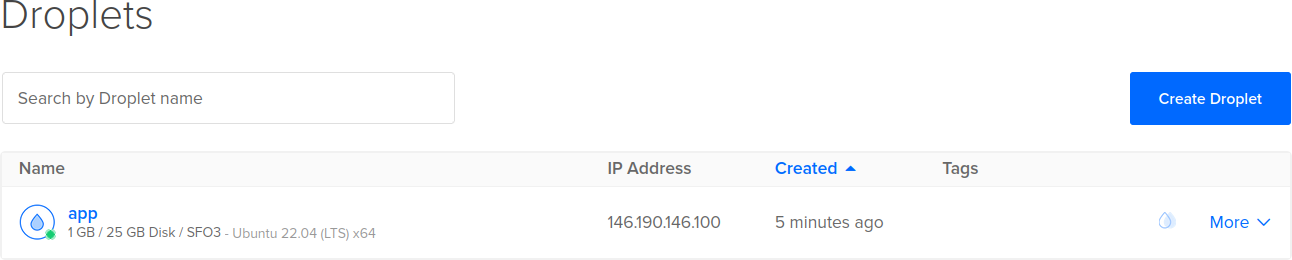

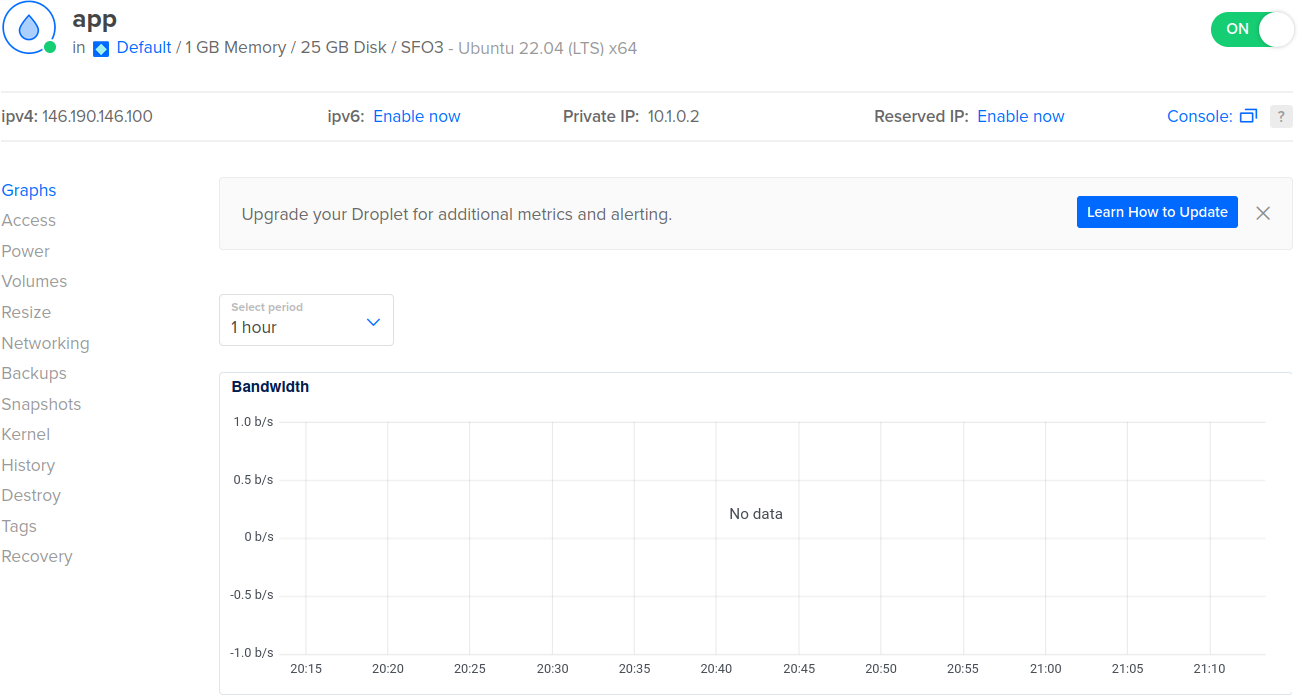

Apply complete! Resources: 1 added, 0 changed, 0 destroyed.If we browse to the DigitalOcean dashboard, the resource is here, it's live, it's ready to go!

Well, not quite ready. I mean, there's no SSH key, no firewall, no monitoring. We'll look at that in the next section.
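The rest of this article only tackles the SSH key. For the firewall, a minimal sketch might look like the following. The resource name, the firewall name, and the rule set are assumptions chosen for illustration (inbound SSH only, unrestricted outbound), not something the original walkthrough deploys. Monitoring, for its part, is simply the boolean monitoring argument on the droplet, visible as monitoring = false in the plan output earlier.

```hcl
# Hypothetical firewall for the "app" droplet; names and rules are illustrative.
resource "digitalocean_firewall" "app" {
  name        = "app-ssh-only"
  droplet_ids = [digitalocean_droplet.app.id]

  # Allow inbound SSH from anywhere. In practice you would likely narrow
  # source_addresses to the networks you actually connect from.
  inbound_rule {
    protocol         = "tcp"
    port_range       = "22"
    source_addresses = ["0.0.0.0/0", "::/0"]
  }

  # Allow all outbound TCP, UDP, and ICMP traffic.
  outbound_rule {
    protocol              = "tcp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  outbound_rule {
    protocol              = "udp"
    port_range            = "1-65535"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }

  outbound_rule {
    protocol              = "icmp"
    destination_addresses = ["0.0.0.0/0", "::/0"]
  }
}
```

Because droplet_ids references the droplet's id, the firewall follows the droplet: whenever Terraform replaces the droplet, it can update the firewall to point at the new one in the same apply.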

Making changes

Once you deploy a machine, the first thing you'll want to do is make changes to it, I guarantee you. And isn't that why we're here? :smile:

Here's a fairly obvious and immediate change you'll want to make to this image. It has no SSH key, so there's currently no way at all to access it.

Normally, you might bust out ssh-keygen and have at it, but then you're back where you started, making hand edits to things with no way to track them. Instead, add the hashicorp/tls provider to generate SSH keys from your Terraform configuration:

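    # terraform.tf
    terraform {
      required_providers {
        digitalocean = {
          source  = "digitalocean/digitalocean"
          version = "~> 2.28"
        }
        tls = {
          source  = "hashicorp/tls"
          version = "4.0.4"
        }
      }
    }

Since you added a new provider, you'll need to initialize again. A plain terraform init is enough to install a newly added provider; the -upgrade flag used here additionally lets Terraform select newer versions of providers it has already locked: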

    terraform init -upgrade

    $ terraform init -upgrade
    Initializing the backend...

    Initializing provider plugins...
    - Finding digitalocean/digitalocean versions matching "~> 2.28"...
    - Finding hashicorp/tls versions matching "4.0.4"...
    - Using previously-installed digitalocean/digitalocean v2.28.1
    - Installing hashicorp/tls v4.0.4...
    - Installed hashicorp/tls v4.0.4 (signed by HashiCorp)

    Terraform has made some changes to the provider dependency selections recorded
    in the .terraform.lock.hcl file. Review those changes and commit them to your
    version control system if they represent changes you intended to make.

    Terraform has been successfully initialized!
    ...

Then you'll need to add two resources: one to create the key itself, and another to add the key to DigitalOcean. Then modify the droplet resource to add the key to the machine:

    # main.tf
    resource "tls_private_key" "app" {
      algorithm = "ED25519"
    }

    resource "digitalocean_ssh_key" "app" {
      name       = "app ssh key"
      public_key = tls_private_key.app.public_key_openssh
    }

    resource "digitalocean_droplet" "app" {
      image    = "ubuntu-22-04-x64"
      name     = "app"
      region   = "sfo3"
      size     = "s-1vcpu-1gb"
      ssh_keys = [digitalocean_ssh_key.app.id]
    }

At this point, if we run graph, we should see this:

It's not required to run plan before apply, because Terraform will automatically create a plan anyway if it needs to. Let's just run terraform apply, and examine closely what's going on:

    terraform apply

    $ terraform apply
    digitalocean_droplet.app: Refreshing state... [id=355390759]
    ...
    ...
    ...
    Plan: 3 to add, 0 to change, 1 to destroy.

    Do you want to perform these actions?
      Terraform will perform the actions described above.
      Only 'yes' will be accepted to approve.

      Enter a value: yes

    digitalocean_droplet.app: Destroying... [id=355390759]
    digitalocean_droplet.app: Still destroying... [id=355390759, 10s elapsed]
    digitalocean_droplet.app: Still destroying... [id=355390759, 20s elapsed]
    digitalocean_droplet.app: Destruction complete after 21s
    tls_private_key.app: Creating...
    tls_private_key.app: Creation complete after 0s [id=d680d387c543c08d6d015ad893164bf97a6bf495]
    digitalocean_ssh_key.app: Creating...
    digitalocean_ssh_key.app: Creation complete after 1s [id=38310647]
    digitalocean_droplet.app: Creating...
    digitalocean_droplet.app: Still creating... [10s elapsed]
    digitalocean_droplet.app: Still creating... [20s elapsed]
    digitalocean_droplet.app: Still creating... [30s elapsed]
    digitalocean_droplet.app: Still creating... [40s elapsed]
    digitalocean_droplet.app: Creation complete after 42s [id=355414489]

    Apply complete! Resources: 3 added, 0 changed, 1 destroyed.

We added two resources and modified the existing one, but Terraform reports that it added three and destroyed one. :astonished:

This is because the droplet resource will not attempt to modify an existing virtual machine, for all the reasons we've discussed. Instead, it will delete the instance and create a new one from scratch. This means that our machine is fresh, completely blank, and unmodified.

This could be frustrating if we had saved a bunch of stuff on that machine. If, instead of doing that, we just accept that our whole machine will be destroyed periodically, then we have the closest thing in existence to a precisely known machine image, because we fully record the steps to create the machine, and execute those steps each time to create it.
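One way to "record the steps to create the machine" is to hand them to the droplet at creation time. The sketch below is a variant of the droplet from main.tf that passes a cloud-init document through the droplet's user_data argument. The choice of nginx is an arbitrary assumption for illustration, and it assumes the image runs cloud-init on first boot, as DigitalOcean's Ubuntu images generally do.

```hcl
# Variant of the droplet from main.tf; it replaces that block rather than
# adding a second droplet. The package choice (nginx) is purely illustrative.
resource "digitalocean_droplet" "app" {
  image    = "ubuntu-22-04-x64"
  name     = "app"
  region   = "sfo3"
  size     = "s-1vcpu-1gb"
  ssh_keys = [digitalocean_ssh_key.app.id]

  # cloud-init reads this on the first boot of each freshly created droplet.
  # The <<- heredoc form strips the common leading indentation, so the
  # document starts with "#cloud-config" as cloud-init expects.
  user_data = <<-EOT
    #cloud-config
    package_update: true
    packages:
      - nginx
  EOT
}
```

With this in place, the setup steps live in version control next to the machine definition, and every replacement droplet starts from the same recorded recipe instead of from someone's memory.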

If we extend this idea to our infrastructure, we can do things like:

- Rotate the SSH keys on our boxes any time we like
- Use a different SSH key for every box
- Swap the OS image or modify our runtime environment whenever we need to

This gives us a lot of freedom to change things that we didn't previously have.
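As a rough sketch of the "different SSH key for every box" idea, the same three resources can be stamped out per machine with for_each. The box names, the local value, and the output are assumptions introduced for this example. The fragment stands in for the single "app" resources in main.tf rather than sitting alongside them.

```hcl
# Hypothetical set of machine names; adjust to taste.
locals {
  boxes = toset(["app-1", "app-2"])
}

# One private key per box.
resource "tls_private_key" "box" {
  for_each  = local.boxes
  algorithm = "ED25519"
}

# Register each public key with DigitalOcean.
resource "digitalocean_ssh_key" "box" {
  for_each   = local.boxes
  name       = "${each.key} ssh key"
  public_key = tls_private_key.box[each.key].public_key_openssh
}

# One droplet per box, each trusting only its own key.
resource "digitalocean_droplet" "box" {
  for_each = local.boxes
  image    = "ubuntu-22-04-x64"
  name     = each.key
  region   = "sfo3"
  size     = "s-1vcpu-1gb"
  ssh_keys = [digitalocean_ssh_key.box[each.key].id]
}

# Map of box name to public IPv4 address, printed after apply.
output "box_ips" {
  value = { for name, d in digitalocean_droplet.box : name => d.ipv4_address }
}
```

Adding a name to the set adds a key and a droplet on the next apply, and removing one destroys them. Keep in mind that the generated private keys are stored in the Terraform state, so the state file should be treated as a secret.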

Cleaning up

Imagine yourself, messing around with dozens of clusters. You're so cool, you're spinning them up like nobody's business, trying out GPUs and storage drivers and all kinds of fun stuff. Only problem is, they're expensive! How do you clean up after yourself in a sane way?

With every other tool in existence, you get to clean it up yourself!

- If you used Ansible, you get to write a destroy playbook.
- If you wrote a script, you're now writing a destroy script.
- If you did it by hand through the web UI, here are the keys to the building and make sure to lock up when you're done.

Terraform, on the other hand, already knows what exists and how it would delete it. All it needs is to be told that you no longer want your resources, with the destroy subcommand:

    terraform destroy

    $ terraform destroy
    tls_private_key.app: Refreshing state... [id=d680d387c543c08d6d015ad893164bf97a6bf495]
    digitalocean_ssh_key.app: Refreshing state... [id=38310647]
    digitalocean_droplet.app: Refreshing state... [id=355414489]
    ...
    ...
    ...
    Plan: 0 to add, 0 to change, 3 to destroy.

    Do you really want to destroy all resources?
      Terraform will destroy all your managed infrastructure, as shown above.
      There is no undo. Only 'yes' will be accepted to confirm.

      Enter a value: yes

    digitalocean_droplet.app: Destroying... [id=355414489]
    digitalocean_droplet.app: Still destroying... [id=355414489, 10s elapsed]
    digitalocean_droplet.app: Still destroying... [id=355414489, 20s elapsed]
    digitalocean_droplet.app: Destruction complete after 21s
    digitalocean_ssh_key.app: Destroying... [id=38310647]
    digitalocean_ssh_key.app: Destruction complete after 1s
    tls_private_key.app: Destroying... [id=d680d387c543c08d6d015ad893164bf97a6bf495]
    tls_private_key.app: Destruction complete after 0s

    Destroy complete! Resources: 3 destroyed.

And that's it! All of your resources are gone, and you don't need to wonder if you're going to get billed next month for things you forgot to delete.

Summary

Because every resource is created via a declarative specification that is committed to version control, Terraform deployments are inherently self-documenting. There is no question as to what you deployed, when, and how.

Because Terraform knows how to resolve differences between states, there is also no question about what changes would be required to move from one state to another, and there is always a path to do so, via Terraform.

It's difficult to overstate how valuable this is. Terraform makes past, present, and future infrastructure states representable and gives you a complete, executable view of your systems, allowing you to understand, modify, and even reproduce systems in exquisite detail.

I can't imagine working on infrastructure without Terraform. It enables my work in a way that no other tool has up to this point, and it is the glue that holds all the rest of my tools and projects together.

If you haven't tried Terraform, I highly recommend it.